Tuesday, February 11, 2025


Sunday, August 20, 2023

Automate Medical Coding Using BERT

Estimated reading time: 5'

- This piece is not a primer on, or a detailed account of, transformer-based encoders, Bidirectional Encoder Representations from Transformers (BERT), multi-label classification, or active learning. Detailed technical information on these models is available in the References section [ref 1, 3, 8, 12].

- The terms medical document, medical note, and clinical note are used interchangeably.

- Some functionalities discussed here are protected intellectual property, hence the omission of source code.

Introduction

Autonomous medical coding refers to the use of artificial intelligence (AI) and machine learning (ML) technologies to automatically assign medical codes to patient records [ref 4]. Medical coding is the process of assigning standardized codes to diagnoses, medical procedures, and services provided during a patient's visit to a healthcare facility. These codes are used for billing, reimbursement, and research purposes.

By automating the medical coding process, healthcare organizations can improve efficiency, accuracy, and consistency, while also reducing costs associated with manual coding.

Challenges

- How to extract medical codes reliably, given that the labeling of medical codes is error-prone and clinical documents are highly inconsistent?

- How to minimize the cost of self-training complex deep models such as transformers while preserving acceptable accuracy?

- How to keep models continuously up to date in a production environment?

Extracting medical codes

- International Classification of Diseases (ICD-10) for diagnosis (with roughly 72,000 codes)

- Current Procedural Terminology (CPT) for procedures and medications (encompassing around 19,000 codes)

- Along with others such as modifiers, SNOMED, and so forth.

- The seemingly endless combinations of codes linked to a specific medical document

- Varied and inconsistent formats of patient records (in terms of terminology, structure, and length).

- Difficulties in gleaning context from medical information systems.

Minimizing costs

Keeping models up-to-date

Architecture

- Tokenizer to extract tokens, segments & vocabulary from a corpus of medical documents.

- Bidirectional Encoder Representations from Transformers (BERT) to generate a representation (embedding) of the documents [ref 3].

- Neural-based classifier to predict a set of diagnostic codes or insurance claims given the embeddings.

- Active/transfer learning framework to update the model through optimized selection/sampling of training data from the production environment.

fig. 2 Architecture for integration of AI components with external medical IT systems
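The four components listed above can be chained into a single prediction path, from raw clinical note to predicted codes. The Java sketch below is only an illustration under stated assumptions, not the author's proprietary implementation: the interfaces NoteTokenizer, DocumentEncoder and CodeClassifier and the class CodingPipeline are hypothetical names, and the active/transfer learning component is left out because it updates the models rather than serving predictions.

```java
import java.util.List;
import java.util.Objects;
import java.util.Set;

// Hypothetical contracts for the tokenizer, BERT encoder and classifier components.
interface NoteTokenizer { List<String> tokenize(String note); }
interface DocumentEncoder { double[] embed(List<String> tokens); }
interface CodeClassifier { Set<String> predict(double[] embedding); }

// Hypothetical composition: clinical note -> tokens -> document embedding -> codes.
final class CodingPipeline {
    private final NoteTokenizer tokenizer;
    private final DocumentEncoder encoder;
    private final CodeClassifier classifier;

    CodingPipeline(NoteTokenizer tokenizer, DocumentEncoder encoder, CodeClassifier classifier) {
        this.tokenizer = Objects.requireNonNull(tokenizer);
        this.encoder = Objects.requireNonNull(encoder);
        this.classifier = Objects.requireNonNull(classifier);
    }

    Set<String> code(String note) {
        List<String> tokens = tokenizer.tokenize(note);
        double[] embedding = encoder.embed(tokens);
        return classifier.predict(embedding);
    }
}
```

Keeping each component behind its own interface would let the encoder or the classifier be retrained and swapped independently, which is what an active/transfer learning loop needs.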

Tokenizer

The effectiveness of a transformer encoder's output hinges on the quality of its input: tokens and segments or sentences derived from clinical documents. Several pressing questions need addressing:

- Which vocabulary is most suitable for extracting tokens from these notes? Should we consider domain-specific terms, abbreviations, TF-IDF scores, etc.?

- What's the best approach to segmenting a note into coherent units, such as sections or sentences?

- How do we incorporate or embed pertinent contextual data about the patient or provider into the encoder?

- Terminology from the American Medical Association (AMA)

- Common medical terms with high TF-IDF scores

- Different senses of words

- Abbreviations

- Semantic descriptions

- Stems

- .....
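To illustrate the TF-IDF criterion in the list above, the sketch below ranks candidate terms from a small, already tokenized corpus of notes. It is a generic TF-IDF computation, not the author's vocabulary builder: the class name TfIdfVocabulary, the length-normalized term frequency, the unsmoothed IDF log(N/df), and the choice of keeping each term's maximum score across notes are all assumptions.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;

// Hypothetical vocabulary selector ranking terms by TF-IDF over a tokenized corpus of notes.
final class TfIdfVocabulary {
    static List<String> topTerms(List<List<String>> documents, int k) {
        int n = documents.size();
        // Document frequency: number of notes containing each term
        Map<String, Integer> docFrequency = new HashMap<>();
        for (List<String> doc : documents)
            for (String term : new HashSet<>(doc))
                docFrequency.merge(term, 1, Integer::sum);

        // Keep, for each term, its highest TF-IDF score across all notes
        Map<String, Double> bestScore = new HashMap<>();
        for (List<String> doc : documents) {
            Map<String, Integer> counts = new HashMap<>();
            for (String term : doc) counts.merge(term, 1, Integer::sum);
            for (Map.Entry<String, Integer> e : counts.entrySet()) {
                double tf = (double) e.getValue() / doc.size();
                double idf = Math.log((double) n / docFrequency.get(e.getKey()));
                bestScore.merge(e.getKey(), tf * idf, Math::max);
            }
        }

        // Sort by descending score, breaking ties alphabetically for a deterministic order
        List<String> terms = new ArrayList<>(bestScore.keySet());
        terms.sort(Comparator.comparingDouble((String t) -> bestScore.get(t)).reversed()
                .thenComparing(Comparator.naturalOrder()));
        return terms.subList(0, Math.min(k, terms.size()));
    }
}
```

A term that appears in every note, such as "patient", gets an IDF of zero and drops to the bottom of the ranking, whereas distinctive clinical terms rise to the top.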

BERT encoder

Context embedding

Segmentation

- Isolating the contextual data as a standalone segment.

- Integrating the contextual data into the document's initial segment.

- Embedding the contextual data into any arbitrarily chosen segment [ref 6].
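The three options above differ only in where the context tokens are placed and which segment (token type) id they receive. The Java sketch below, built around a hypothetical class SegmentedInput, lays out a BERT-style input of the form [CLS] segment [SEP] segment [SEP] with one segment id per segment. Note that the original BERT model learns only two token type embeddings (ids 0 and 1), so using more than two segments assumes a model with a larger token type table.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical BERT-style input: tokens aligned with their segment (token type) ids.
final class SegmentedInput {
    final List<String> tokens = new ArrayList<>();
    final List<Integer> segmentIds = new ArrayList<>();

    // Lays out [CLS] s0 [SEP] s1 [SEP] ... with segment id i for the tokens of segment i.
    static SegmentedInput build(List<List<String>> segments) {
        SegmentedInput input = new SegmentedInput();
        input.tokens.add("[CLS]");
        input.segmentIds.add(0);
        for (int id = 0; id < segments.size(); id++) {
            for (String token : segments.get(id)) {
                input.tokens.add(token);
                input.segmentIds.add(id);
            }
            input.tokens.add("[SEP]");
            input.segmentIds.add(id);
        }
        return input;
    }

    // Option 1: contextual data as a standalone first segment, followed by the note segments.
    static SegmentedInput contextAsSegment(List<String> context, List<List<String>> noteSegments) {
        List<List<String>> segments = new ArrayList<>();
        segments.add(context);
        segments.addAll(noteSegments);
        return build(segments);
    }

    // Options 2 and 3: contextual data prepended to the segment at the given index
    // (index 0 corresponds to the document's initial segment).
    static SegmentedInput contextInSegment(List<String> context, List<List<String>> noteSegments, int index) {
        List<List<String>> segments = new ArrayList<>(noteSegments);
        List<String> merged = new ArrayList<>(context);
        merged.addAll(noteSegments.get(index));
        segments.set(index, merged);
        return build(segments);
    }
}
```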

Transformer

- Grasp the contextual significance of medical phrases.

- Create embeddings/representations that merge clinical notes with contextual data.

- Pretraining on an extensive, domain-specific corpus [ref 8].

- Fine-tuning tailored for specific tasks, like classification [ref 9].

- Directly from the output of the pretrained model (document embeddings).

- During the fine-tuning of the pretrained model, which runs concurrently with active learning to update the model.
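When the classifier consumes the output of the pretrained model directly, the per-token vectors of the last hidden layer must first be reduced to a single document embedding. Two common reductions are sketched below in a hypothetical class DocumentEmbedding: taking the [CLS] vector, or averaging the vectors of non-padding tokens using the attention mask. The article does not say which reduction is used, so both are shown as assumptions.

```java
// Hypothetical helpers reducing the last hidden layer [numTokens][hiddenSize] to one vector.
final class DocumentEmbedding {
    // [CLS] pooling: BERT places [CLS] first, so its vector is row 0.
    static double[] clsPooling(double[][] hiddenStates) {
        return hiddenStates[0].clone();
    }

    // Mean pooling: average the vectors of real tokens (mask = 1), ignoring padding (mask = 0).
    static double[] meanPooling(double[][] hiddenStates, int[] attentionMask) {
        int hiddenSize = hiddenStates[0].length;
        double[] pooled = new double[hiddenSize];
        int count = 0;
        for (int t = 0; t < hiddenStates.length; t++) {
            if (attentionMask[t] == 0) continue;
            for (int d = 0; d < hiddenSize; d++) pooled[d] += hiddenStates[t][d];
            count++;
        }
        // Guard against an all-padding input
        for (int d = 0; d < hiddenSize; d++) pooled[d] /= Math.max(count, 1);
        return pooled;
    }
}
```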

Self-attention

The foundation of a transformer module is the self-attention block that processes token, position, and type embeddings prior to normalization. Multiple such modules are layered together to construct the encoder. A similar architecture is employed for the decoder.
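The core computation inside the self-attention block is scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V, where the queries Q, keys K and values V are linear projections of the input embeddings. The sketch below implements a single head on plain arrays; the class SelfAttention and its methods are hypothetical, and the multi-head split, attention masking, residual connections and layer normalization of a full BERT layer are deliberately omitted.

```java
// Hypothetical single-head scaled dot-product self-attention on plain arrays.
final class SelfAttention {
    static double[][] matmul(double[][] a, double[][] b) {
        double[][] c = new double[a.length][b[0].length];
        for (int i = 0; i < a.length; i++)
            for (int j = 0; j < b[0].length; j++)
                for (int k = 0; k < b.length; k++)
                    c[i][j] += a[i][k] * b[k][j];
        return c;
    }

    // x: [numTokens][dModel] embeddings; wq, wk: [dModel][dK]; wv: [dModel][dV].
    static double[][] attend(double[][] x, double[][] wq, double[][] wk, double[][] wv) {
        double[][] q = matmul(x, wq), k = matmul(x, wk), v = matmul(x, wv);
        int n = x.length, dK = k[0].length;
        double[][] weights = new double[n][n];
        for (int i = 0; i < n; i++) {
            // Scaled dot products between token i's query and every key
            double max = Double.NEGATIVE_INFINITY;
            for (int j = 0; j < n; j++) {
                double score = 0.0;
                for (int d = 0; d < dK; d++) score += q[i][d] * k[j][d];
                weights[i][j] = score / Math.sqrt(dK);
                max = Math.max(max, weights[i][j]);
            }
            // Row-wise softmax, shifted by the max for numerical stability
            double sum = 0.0;
            for (int j = 0; j < n; j++) {
                weights[i][j] = Math.exp(weights[i][j] - max);
                sum += weights[i][j];
            }
            for (int j = 0; j < n; j++) weights[i][j] /= sum;
        }
        // Each output row is an attention-weighted mix of the value vectors
        return matmul(weights, v);
    }
}
```

Each row of the attention weights sums to one, so every output token is a convex combination of the value vectors of all tokens in the sequence.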

Classifier

The network's structure, including the number and dimensions of hidden layers, doesn't have a significant influence on the overall predictive performance.
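The article does not describe the classifier's layers. Since their structure reportedly matters little, the sketch below uses the simplest head that fits multi-label classification: a single linear layer followed by an independent sigmoid per code, keeping every code whose probability reaches a threshold. The class MultiLabelHead, the code labels and the 0.5 threshold used in the test are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical linear head with one independent sigmoid per medical code.
final class MultiLabelHead {
    private final List<String> codes;
    private final double[][] weights; // [numCodes][embeddingSize]
    private final double[] bias;      // [numCodes]
    private final double threshold;

    MultiLabelHead(List<String> codes, double[][] weights, double[] bias, double threshold) {
        this.codes = codes;
        this.weights = weights;
        this.bias = bias;
        this.threshold = threshold;
    }

    static double sigmoid(double z) {
        return 1.0 / (1.0 + Math.exp(-z));
    }

    // Returns every code whose predicted probability reaches the threshold.
    List<String> predict(double[] embedding) {
        List<String> predicted = new ArrayList<>();
        for (int c = 0; c < codes.size(); c++) {
            double logit = bias[c];
            for (int d = 0; d < embedding.length; d++) logit += weights[c][d] * embedding[d];
            if (sigmoid(logit) >= threshold) predicted.add(codes.get(c));
        }
        return predicted;
    }
}
```

Unlike a softmax, independent sigmoids let a single note receive any number of codes, including none.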

Active learning

- Selecting data samples with labels that deviate from the distribution initially employed during training (Active learning) [ref 12].

- Adjusting the transformer for the classification objective using these samples (Transfer learning)
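One simple way to quantify how far a production sample's labels deviate from the training distribution is to score each sample by the average surprisal, -log p_train(code), of its codes, then pass the highest-scoring samples to fine-tuning. The sketch below (hypothetical class DistributionShiftSampler) is an illustrative criterion, not the author's selection strategy; it assumes production samples already carry labels, for example codes validated by human coders, and that training label frequencies are available.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical selector of production samples whose codes are rare or unseen in training.
final class DistributionShiftSampler {
    private static final double EPSILON = 1e-6; // keeps -log finite for unseen codes

    // Average surprisal of a sample's codes under the training label frequencies.
    static double score(Set<String> codes, Map<String, Double> trainFrequency) {
        if (codes.isEmpty()) return 0.0;
        double total = 0.0;
        for (String code : codes)
            total += -Math.log(trainFrequency.getOrDefault(code, 0.0) + EPSILON);
        return total / codes.size();
    }

    // Indices of the `budget` samples that deviate most from the training distribution.
    static List<Integer> select(List<Set<String>> sampleCodes, Map<String, Double> trainFrequency, int budget) {
        List<Integer> indices = new ArrayList<>();
        double[] scores = new double[sampleCodes.size()];
        for (int i = 0; i < sampleCodes.size(); i++) {
            scores[i] = score(sampleCodes.get(i), trainFrequency);
            indices.add(i);
        }
        indices.sort((a, b) -> Double.compare(scores[b], scores[a]));
        return new ArrayList<>(indices.subList(0, Math.min(budget, indices.size())));
    }
}
```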

References

[9] What Is Fine-Tuning and How Does It Work in Neural Networks?

[11] A Large Language Model for Electronic Health Records

[12] Towards Data Science: Active Learning in Machine Learning

Glossary

- Electronic health record (EHR): An electronic version of a patient's medical history that is maintained by the provider over time and may include all of the key administrative clinical data relevant to that person's care under a particular provider, including demographics, progress notes, problems, medications, vital signs, past medical history, immunizations, laboratory data, and radiology reports.

- Medical document: Any medical artifact related to the health of a patient, such as clinical notes, X-rays, lab analysis results, and so on.

- Clinical note: Medical document written by a physician following a visit. It is a textual description of the visit, focusing on vital signs, diagnosis, recommendations, and follow-up.

- ICD (International Classification of Diseases): Diagnostic codes that serve a broad range of uses globally and provide critical knowledge on the extent, causes, and consequences of human disease and death worldwide via data that is reported and coded with the ICD. Clinical terms coded with ICD are the main basis for health recording and statistics on disease in primary, secondary, and tertiary care, as well as on cause-of-death certificates.

- CPT (Current Procedural Terminology): Codes that offer health care professionals a uniform language for coding medical services and procedures in order to streamline reporting and increase accuracy and efficiency. CPT codes are also used for administrative management purposes such as claims processing and developing guidelines for medical care review.

He has been director of data engineering at Aideo Technologies since 2017 and he is the author of "Scala for Machine Learning" Packt Publishing ISBN 978-1-78712-238-3

Wednesday, November 9, 2022

Enhance Stem-Based BERT WordPiece Tokenizer

Estimated reading time: 4'

- Environments: Java 11, Scala 2.12.11

- To enhance the readability of the algorithm implementations, we have omitted non-essential code elements like error checking, comments, exceptions, validation of class and method arguments, scoping qualifiers, and import statements.

The Bidirectional Encoder Representations from Transformers (BERT) model uses a tokenizer that addresses the issue of out-of-vocabulary words.

WordPiece tokenizer

The purpose of the WordPiece tokenizer is to handle out-of-vocabulary words, that is, words that have not been identified and recorded in the vocabulary. This tokenizer and its variants are used in transformer models such as BERT or GPT.

Here are the steps for processing a word using the WordPiece tokenizer, given a vocabulary:

1 Check whether the word is present in our vocabulary.

1a If the word is present in the vocabulary, then use it as a token.

1b If the word is not present in the vocabulary, then split the word into sub-words.

2 Check whether the sub-word is present in the vocabulary.

2a If the sub-word is present in the vocabulary, then use it as a token.

2b If the sub-word is not present in the vocabulary, then split the sub-word.

3 Repeat from 2.

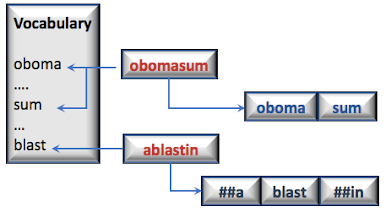

In the following example, two out-of-vocabulary words, obomasum and ablasting, are matched against a vocabulary. The first word, obomasum, is broken into two sub-words, each of which belongs to the vocabulary. The second word, ablasting, is broken into three sub-words, of which only blast is found in the vocabulary.
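The steps above correspond to the greedy longest-match-first procedure used by BERT's WordPiece tokenizer. A minimal Java sketch follows; the class name GreedyWordPiece and the toy vocabulary in the test are hypothetical. As in BERT, sub-words that do not start the word are stored in the vocabulary with the ## prefix, and if some remainder of the word cannot be matched, the whole word is mapped to [UNK].

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;

// Hypothetical greedy longest-match-first WordPiece tokenizer for a single word.
final class GreedyWordPiece {
    private final Set<String> vocabulary; // non-initial pieces are stored with a "##" prefix

    GreedyWordPiece(Set<String> vocabulary) {
        this.vocabulary = vocabulary;
    }

    List<String> tokenize(String word) {
        // Steps 1 and 1a: the whole word is in the vocabulary
        if (vocabulary.contains(word)) return List.of(word);

        // Steps 1b to 3: repeatedly take the longest piece of the remainder found in the vocabulary
        List<String> pieces = new ArrayList<>();
        int start = 0;
        while (start < word.length()) {
            int end = word.length();
            String match = null;
            while (start < end) {
                String candidate = (start > 0 ? "##" : "") + word.substring(start, end);
                if (vocabulary.contains(candidate)) {
                    match = candidate;
                    break;
                }
                end--; // shrink the candidate from the right
            }
            // No piece matches: the whole word is unknown
            if (match == null) return List.of("[UNK]");
            pieces.add(match);
            start = end;
        }
        return pieces;
    }
}
```

With the vocabulary {play, ##ing, un, ##play, ##er}, the word unplayer is split into un, ##play and ##er, whereas a word with no matching piece, such as xyz, becomes [UNK].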

Stem-based variant

Let's re-implement the WordPiece tokenizer using a predefined vocabulary of stems.

Java implementation

The following implementation in Java can be further generalized by implementing a recursive method to extract a stem from a sub-word.

import java.util.ArrayList;
import java.util.List;
import java.util.Set;

public class StemWordPieceTokenizer {
    // Vocabulary of stems (referenced, but not declared, in the original listing)
    private final Set<String> vocabulary;
    private final int maxInputChars;

    public StemWordPieceTokenizer(Set<String> vocabulary, int maxInputChars) {
        this.vocabulary = vocabulary;
        this.maxInputChars = maxInputChars;
    }

    List<String> stemTokenizer(String sentence) {
        List<String> outputTokens = new ArrayList<>();
        String[] tokens = sentence.trim().split("\\s+");

        for (String token : tokens) {
            // If the token is too long, ignore it
            if (token.length() > maxInputChars)
                outputTokens.add("[UNK]");
            // If the token belongs to the vocabulary
            else if (vocabulary.contains(token))
                outputTokens.add(token);
            // ... otherwise attempt to break it down around a stem
            else {
                char[] chars = token.toCharArray();
                int start = 0;
                int end;
                // Walk through the token
                while (start < chars.length - 1) {
                    end = chars.length;
                    while (start < end) {
                        String subToken = token.substring(start, end);
                        // If the sub-token (stem) is found in the vocabulary
                        if (vocabulary.contains(subToken)) {
                            String prefix = token.substring(0, start);
                            // If the substring prior to the stem is also in the vocabulary
                            if (vocabulary.contains(prefix))
                                outputTokens.add(prefix);
                            // Otherwise add it as a word piece
                            else if (!prefix.isEmpty())
                                outputTokens.add("##" + prefix);
                            outputTokens.add(subToken);

                            // Extract the substring after the stem
                            String suffix = token.substring(end);
                            if (!suffix.isEmpty()) {
                                // If this substring is already in the vocabulary...
                                if (vocabulary.contains(suffix))
                                    outputTokens.add(suffix);
                                // ... otherwise add it as a word piece
                                else
                                    outputTokens.add("##" + suffix);
                            }
                            // Stem found: exit both loops
                            end = chars.length;
                            start = end;
                        }
                        // Otherwise shrink the candidate stem from the right
                        else
                            end--;
                    }
                    start++;
                }
            }
        }
        return outputTokens;
    }
}

Scala implementation

For good measure, I include a Scala implementation.

import scala.collection.mutable.ListBuffer

// vocabulary and maxInputChars are referenced, but not declared, in the original method
class StemWordPieceTokenizer(vocabulary: Set[String], maxInputChars: Int) {

  def stemTokenize(sentence: String): List[String] = {
    val outputTokens = ListBuffer[String]()
    val tokens = sentence.trim.split("\\s+")

    tokens.foreach(
      token => {
        // If the token is too long, ignore it
        if (token.length > maxInputChars)
          outputTokens.append("[UNK]")
        // If the token belongs to the vocabulary
        else if (vocabulary.contains(token))
          outputTokens.append(token)
        // ... otherwise attempts to break it down
        else {
          val chars = token.toCharArray
          var start = 0
          var end = 0

          // Walks through the token
          while (start < chars.length - 1) {
            end = chars.length
            while (start < end) {
              // extract the stem
              val subToken = token.substring(start, end)
              // If the sub token is found in the vocabulary
              if (vocabulary.contains(subToken)) {
                val prefix = token.substring(0, start)
                // If the substring prior to the stem is also contained in the vocabulary
                if (vocabulary.contains(prefix))
                  outputTokens.append(prefix)
                // Otherwise added as a word piece
                else if (prefix.nonEmpty)
                  outputTokens.append(s"##$prefix")
                outputTokens.append(subToken)

                // Extract the substring after the stem
                val suffix = token.substring(end)
                if (suffix.nonEmpty) {
                  // If this substring is already in the vocabulary..
                  if (vocabulary.contains(suffix))
                    outputTokens.append(suffix)
                  // otherwise added as a word piece
                  else
                    outputTokens.append(s"##$suffix")
                }
                // Stem found: exit both loops
                end = chars.length
                start = chars.length
              }
              else
                end -= 1
            }
            start += 1
          }
        }
      }
    )
    outputTokens.toList
  }
}

Thank you for reading this article. For more information ...
